Here’s one for the strange interactions file.

I have a client that is using FreeNAS for cheap, high-speed storage on Supermicro servers with SSDs. There was some discussion about using OpenZFS on Linux, but the FreeNAS UI is a lot more convenient for the operators, and the BSD/ZFS combination has always been solid. These are standard 2U servers with 24 2.5” bays and the (very nice) LSI-3008 controller running IT firmware in JBOD mode.

The setup is a primary server starting with seven 2 TB SSDs (raidz on six plus a hot spare) and, to keep costs down, a backup server with seven Seagate hybrid 2 TB drives that receives hourly ZFS snapshots via send/recv.
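FreeNAS can schedule this kind of replication from its UI (periodic snapshot and replication tasks), so the operators may never type these commands themselves. As a rough sketch of what an hourly incremental send/recv amounts to underneath, here is a small Python helper that only *builds* the shell commands rather than running them. The dataset names (`tank/data`, `backup/data`), the host `backup-nas` and the `auto-YYYYMMDDHH` snapshot naming are all hypothetical.

```python
import shlex
from datetime import datetime, timedelta

# Hypothetical names: substitute the real datasets and backup host.
SOURCE_DATASET = "tank/data"
TARGET_DATASET = "backup/data"
TARGET_HOST = "backup-nas"


def snapshot_name(when: datetime) -> str:
    """Hourly snapshot label such as auto-2017061314 (hypothetical naming scheme)."""
    return when.strftime("auto-%Y%m%d%H")


def snapshot_command(when: datetime) -> str:
    """Command that takes this hour's snapshot on the primary."""
    return shlex.join(["zfs", "snapshot", f"{SOURCE_DATASET}@{snapshot_name(when)}"])


def replication_pipeline(prev: datetime, curr: datetime) -> str:
    """Pipeline that sends only the changes between two hourly snapshots to the backup."""
    send = [
        "zfs", "send", "-i",
        f"{SOURCE_DATASET}@{snapshot_name(prev)}",
        f"{SOURCE_DATASET}@{snapshot_name(curr)}",
    ]
    # -F rolls the target back to its most recent snapshot before receiving,
    # discarding any changes made on the backup since then.
    recv = ["ssh", TARGET_HOST, "zfs", "recv", "-F", TARGET_DATASET]
    return f"{shlex.join(send)} | {shlex.join(recv)}"


if __name__ == "__main__":
    now = datetime(2017, 6, 13, 14)
    print(snapshot_command(now))
    print(replication_pipeline(now - timedelta(hours=1), now))
```

Something like cron would run the snapshot command every hour and then the pipeline for the previous and current snapshots; sending only the incremental delta (`-i`) is what keeps the hourly transfers to the slower hybrid drives small.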

The initial installations went fine, but then, for no obvious reason, the server with the hybrid drives started hanging on boot while trying to load the filesystem. They tried reinstalling, but even the installer hung at the same spot with the message:

`Trying to mount root from cd9660:/dev/iso9660/FreeNAS`

My first thought was that the BIOS was getting confused by the various UEFI vs. legacy BIOS options, perhaps combined with some incompatibility in the XHCI hand-off for the USB key they were using to install it.

So I spent a few hours in systematic trial-and-error mode, changing the various BIOS settings around those options and trying different USB keys, with no luck. At one point I noticed that the USB key was showing up as da7 (BSD speak for SCSI/USB disk unit 7, /dev/da7), so I thought I would retry the install without any of the internal storage so that it would come up as da1 (even though this shouldn’t make any difference).

Getting ready to do this, I leaned over and started pulling the disks, since they’re hot-swappable, while watching the system messages report each one disappearing from the SAS bus. As soon as the last disk disappeared, the boot process picked up, mounted the root volume and started the installer.

Strange, to put it mildly. These disks had only been “formatted” as members of their ZFS pool; they had never been involved in a boot or install process, and the boot option was disabled on the LSI-3008. Even so, the install went fine onto the two SSDs in the back and the machine booted up just fine, so I walked through some basic configuration steps, reinserted the drives and imported the zpool. All fine and good.

Then I tested a restart and immediately ran into the same hang in the boot process, only this time it was trying to mount root from the ZFS file system on the mirrored SSDs. WTF?

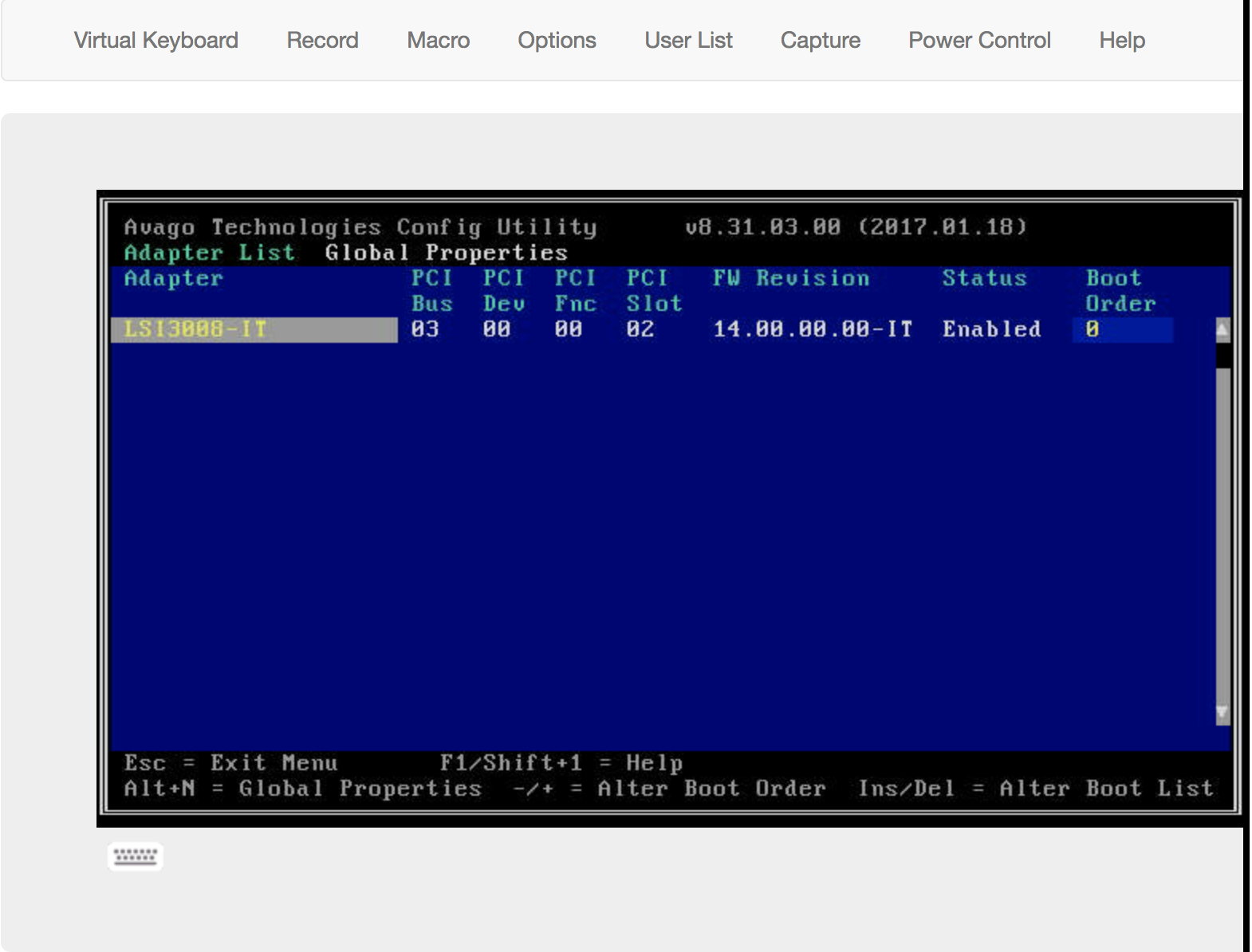

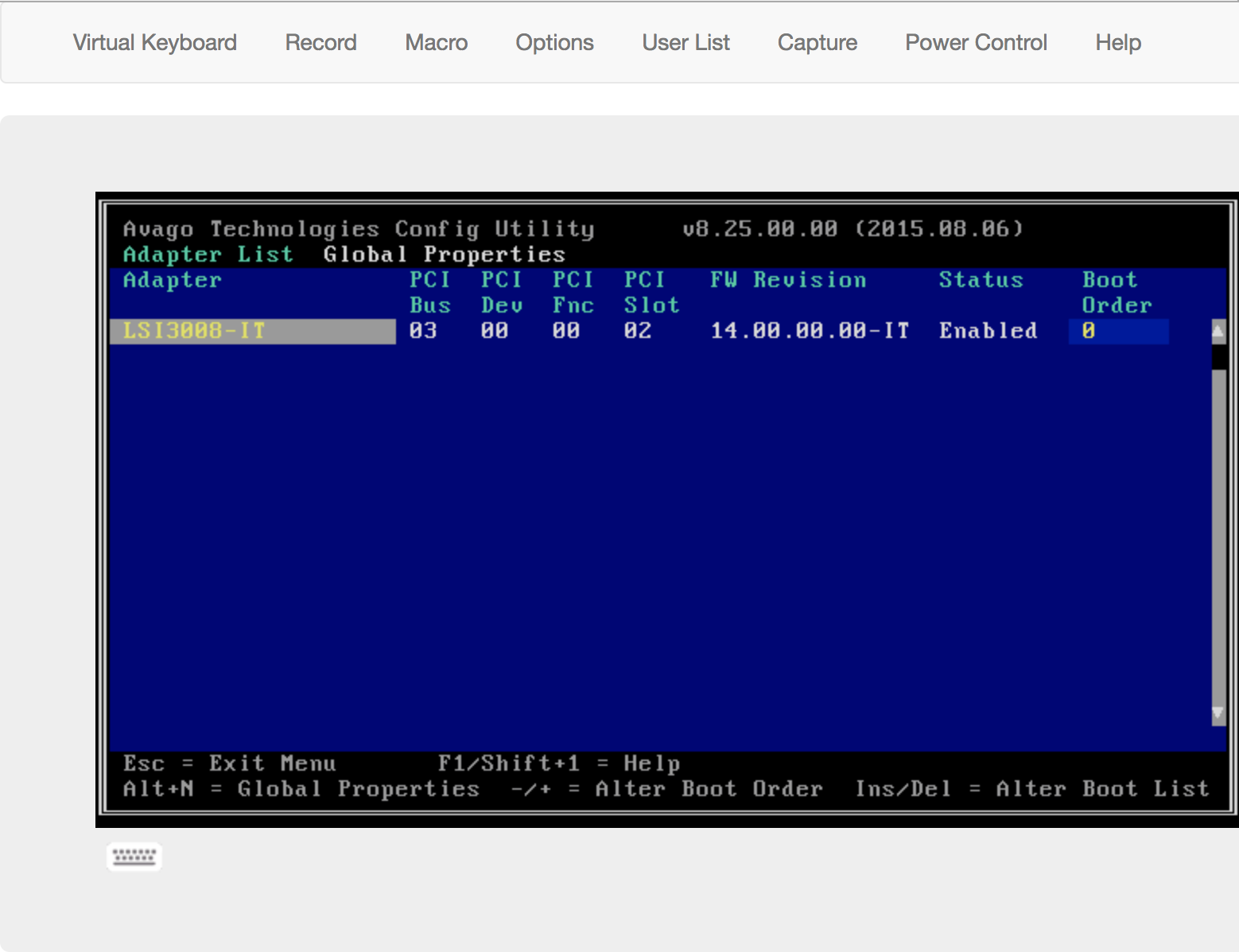

As with the install, all it took to unblock the situation was to physically eject the storage disks, and it picked right up. However, this is not a practical solution for a server living in a rack in a datacenter.

By this point I had gone over every BIOS setting on the two servers, and they were identical in every pertinent respect. So I started looking more closely at the LSI-3008 in each of these ostensibly identical servers and discovered something that had slipped by earlier: while the MPT firmware revision was the same on both cards (14.00.00.00), up in the top section there was another firmware notice, in a much more subtle low-contrast grey, showing a version dating back to 2015. A quick check on the other server showed it running something from 2017.


Then came the usual song and dance of finding the firmware update in the morass of nonsense provided by Broadcom (purchasers of Avago/LSI), who insist on the serial number of the card, which was inside a server on the workbench with four other servers and switches piled on top. Nowhere in the BIOS utility could I find the serial number. Finally, a lucky Google search turned up a link to the driver and firmware in a Supermicro repository, which I downloaded via FTP (FTP! In 2017!). Next came figuring out how to build a DOS boot disk from a Mac or a Windows 10 machine. Answer: Rufus, which still offers a FreeDOS option.

The first few USB keys took the FreeDOS install but wouldn’t boot on the server; finally a SanDisk key from the bag came through. With the firmware updated, everything worked properly again.

The puzzling part of this interaction is that the initial installation worked just fine with the disks in the server. But at that stage the disks were either completely unformatted or, more probably, carried an MBR partition table. When ZFS claims a disk, it puts down a GPT partition table. So it seems the old firmware had some strange bug triggered by the presence of GPT-partitioned disks.
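On FreeBSD, `gpart show` is the quick way to see which disks carry a GPT. As an illustration of what that difference looks like on disk, here is a small Python sketch, assuming 512-byte logical sectors, that checks the first two sectors for the classic MBR boot signature `0x55AA` at byte 510 and the GPT header signature `EFI PART` at the start of LBA 1. It does not reproduce the firmware bug; it only distinguishes the blank or MBR disks present at install time from the GPT-labelled disks left behind once ZFS claimed them. The function names are made up for this example.

```python
SECTOR_SIZE = 512  # assumption: 512-byte logical sectors (4Kn disks put the GPT header at byte 4096)


def partition_scheme(head: bytes, sector_size: int = SECTOR_SIZE) -> str:
    """Classify the first two sectors of a disk as 'gpt', 'mbr' or 'none'."""
    if len(head) < 2 * sector_size:
        raise ValueError("need at least the first two sectors of the disk")
    # GPT is checked first because GPT disks also carry a protective MBR
    # with the same 0x55AA boot signature.
    # The GPT header sits in LBA 1 and begins with the 8-byte signature "EFI PART".
    if head[sector_size:sector_size + 8] == b"EFI PART":
        return "gpt"
    if head[510:512] == b"\x55\xaa":
        return "mbr"
    return "none"


def read_disk_head(path: str, sector_size: int = SECTOR_SIZE) -> bytes:
    """Read the first two sectors of a device or image file, e.g. /dev/da0 (needs root)."""
    # Unbuffered so the read size stays a whole number of sectors, as raw devices expect.
    with open(path, "rb", buffering=0) as f:
        return f.read(2 * sector_size)


if __name__ == "__main__":
    import sys

    for dev in sys.argv[1:]:
        print(dev, partition_scheme(read_disk_head(dev)))
```

Run against each pool member before and after ZFS claims it, this would report `none` or `mbr` for the install-time state and `gpt` afterwards, which is exactly the change that coincided with the boot hang.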

Moral of the story: always update the firmware on all of the components in your systems. Weird things will still happen, but you might be able to avoid some strange time wasters.