I’m working on an interesting little installation where a client wants to consolidate a number of small, independent Minio instances on a TrueNAS SCALE box. The current version of TrueNAS can run Docker containers and prepackaged containers through Apps, so this should be easy, right?

The basics are all straightforward and well documented. Using the built-in App catalogue I can easily deploy a number of Minio instances, each with their own assigned storage space without any difficulties.

The catch? Well, they are containers so they all share the same IP on the interface that Kubernetes is hooked up to, so I need to assign each one specific port numbers for the Minio service and web UI. But I don’t want to expose this to the end users. I want them to have a unique FQDN per instance that runs on port 443.

This is a solved problem: put a reverse proxy in front of the Minio instances and use virtual hosts to map an FQDN to a given IP and port number. The Minio documentation is pretty clear on how to set all of this up as well (and I’ve done this a number of times before).
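To make the virtual host idea concrete, here is a minimal Python sketch of the lookup a reverse proxy performs on each request. The hostnames, IP address and port numbers are made-up placeholders, not values from this installation, and a real proxy does far more than this (TLS, headers, streaming).

```python
from typing import Dict, Optional, Tuple

# Hypothetical mapping: one FQDN per Minio instance, all pointing at the
# same TrueNAS IP but at different ports. Every value here is a placeholder.
VHOSTS: Dict[str, Tuple[str, int]] = {
    "minio-a.example.com": ("192.0.2.10", 9000),
    "minio-b.example.com": ("192.0.2.10", 9010),
}


def resolve_upstream(host_header: str) -> Optional[Tuple[str, int]]:
    """Return the (ip, port) upstream for a request's Host header, or None."""
    # Drop an optional ":port" suffix and normalise case before the lookup.
    hostname = host_header.split(":", 1)[0].strip().lower()
    return VHOSTS.get(hostname)
```

The end users only ever see the FQDN on port 443; the per-instance port numbers stay hidden behind the proxy.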

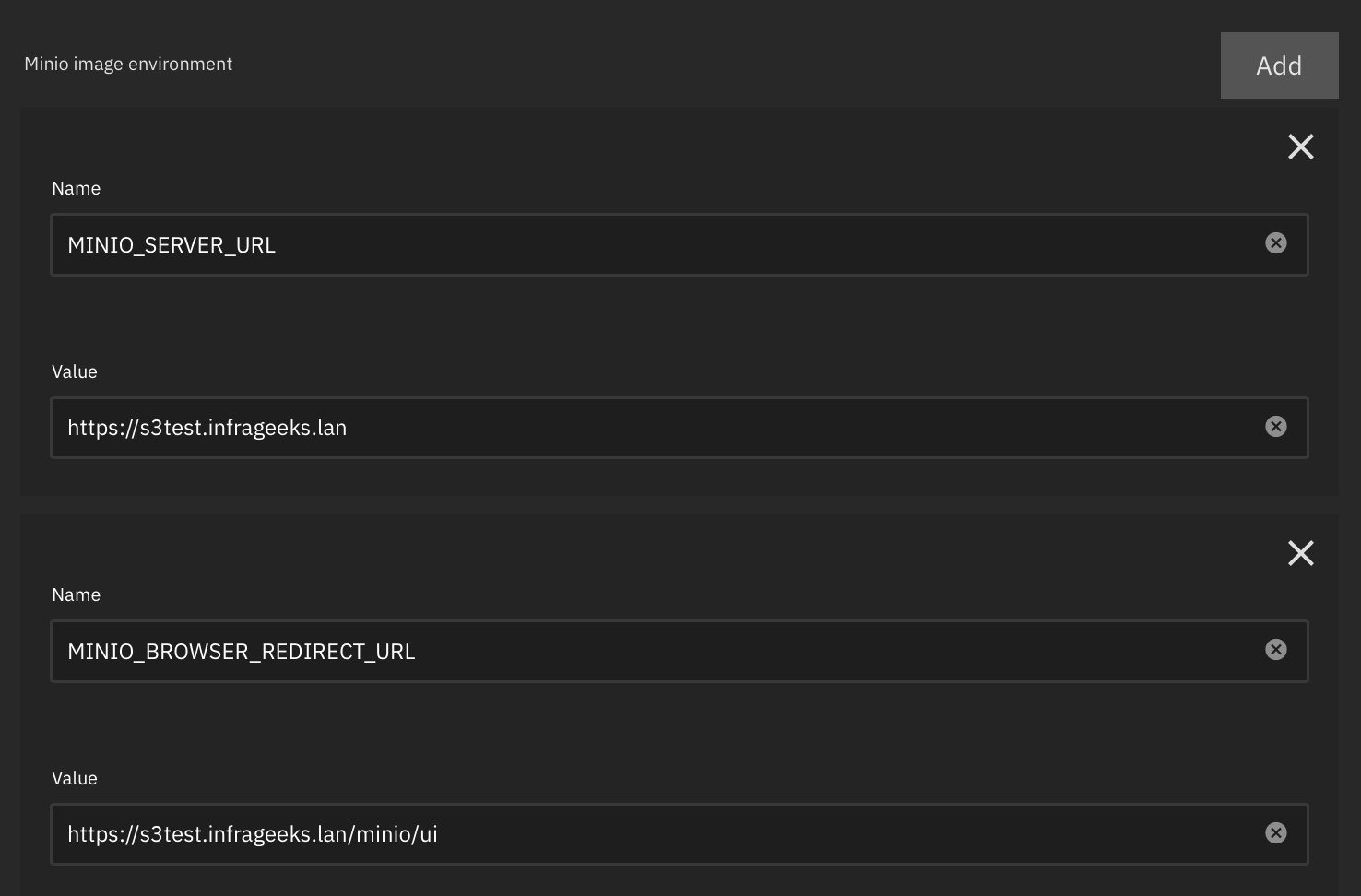

This consists of adding a couple of environment variables that reference the URL of the reverse proxy:

- MINIO_SERVER_URL: the URL of the proxy address where I do the TLS termination
- MINIO_BROWSER_REDIRECT_URL: the URL of the proxy with a path that will map to the browser interface
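As a rough illustration, the two values for one instance could be derived from its FQDN like this. The helper name, the example hostname and the `/console` path are my own placeholders; use whatever path you actually map to the browser interface in your proxy.

```python
from typing import Dict


def minio_proxy_env(fqdn: str, console_path: str = "/console") -> Dict[str, str]:
    """Build the two proxy-related environment variables for one Minio instance.

    Assumes TLS is terminated at the proxy on port 443, so both URLs use https.
    The default console_path is a placeholder, not a Minio default.
    """
    base = f"https://{fqdn}"
    return {
        "MINIO_SERVER_URL": base,
        "MINIO_BROWSER_REDIRECT_URL": f"{base}/{console_path.strip('/')}",
    }
```

The resulting key/value pairs are what you would enter as extra environment variables when deploying each Minio instance.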

This works just fine when the reverse proxy is external to the TrueNAS server. However, if you try to run the proxy in a VM or a Docker container on the same box, there’s a strange little quirk in the Kubernetes networking configuration that prevents manually launched Docker containers and VMs from communicating with the TrueNAS IP.

The first thing that needs to be done is to create a bridge interface rather than using the physical interface, but the key factor is to not configure the bridge for the Kubernetes apps, but only for the VM or Docker container.

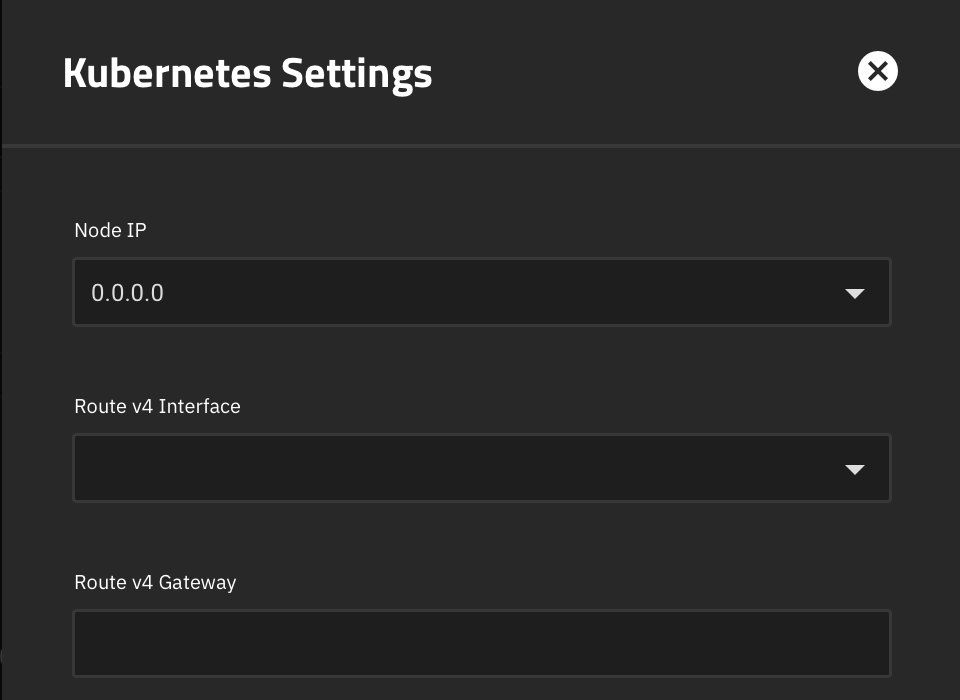

Under Apps > Settings > Advanced Settings the fields for interface and Route v4 gateway must be empty. If you’re like me, you set these values when setting up Kubernetes as they seem to be something you’d need. But no - they need to be empty to permit communications with the host.

So with that configured, I deployed the little app called NGINX Proxy Manager as a Docker container with a dedicated IP address on the bridge interface, without doing any port mapping. From there it’s just a matter of setting up the proxy configuration in the web interface of the app, which is dead simple and also handles convenient stuff like easy import of certificates or using Let’s Encrypt certificates.
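If the proxy hosts come up as unreachable, a quick way to tell a networking problem from a proxy misconfiguration is to test a plain TCP connection from the proxy's side to the TrueNAS IP and each Minio port. This is only a sketch using the Python standard library; the function name is my own, and any address and port you pass in are specific to your setup.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection handles name resolution and the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False
```

For example, `can_connect("192.0.2.10", 9000)` (placeholder values) returning False from the container while the same call works from another machine on the network would point at the host communication quirk described above rather than at the proxy setup.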